The Cloud File Storage platform uses local SSD caching on the vGateways to improve performance for the 'active' set of data. This allows for a highly scalable, dynamic, and cost-effective solution that leverages Expedient's Cloud Object Storage for bulk data storage.

Cache Behavior

Metadata

An up-to-date copy of the metadata for a Global File System is always cached on the local vGateway. This occurs without user intervention, as the operation is managed by the Portal. Changes to metadata are applied on the local vGateway, uploaded to the Portal servers, and, where multiple vGateways are deployed, pushed to every other system joined to the Global File System. When a new filer is deployed and configured, its first action is to pull down the latest copy of the Global File System metadata from the Portal infrastructure.

File Stubbing

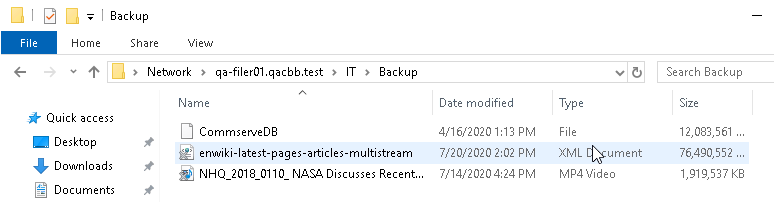

All data contained within the Global File System is online and accessible at any time (assuming the filer has not become disconnected from the internet). While the data may not be located locally in cache, the data can always be accessed and will appear in the file system. The 'placeholder' files downloaded in the metadata and displayed on the vGateway are known as file stubs. Access to these files may be delayed as the data is pulled from the Global File System into the local cache of the filer. Data that is locally cached on a vGateway will have no icon, while data not in cache and represented as a file stub is denoted with an 'X' icon.

In the screenshot above, the 'CommserveDB' file is located locally within the vGateway cache. Because the remaining files are denoted with an 'X', they are not cached locally but remain available through the Global File System.

Cache Pinning

If data must always be available in the local filer cache, the folder that contains it can be pinned to the cache. Pinning a folder immediately triggers its data to be pulled into cache from the Global File System, and the folder is then ignored by all eviction processes.

Extreme caution should be taken when pinning workloads to cache. The space consumed by pinned data is no longer available to the intelligent caching algorithms, which will likely lead to performance issues because the remaining cache is effectively undersized. Additionally, pinning a folder whose volume is larger than the available cache can cause sync issues between your vGateway and the Portal. Unless your Solutions Architect or Sales team has sized your solution to accommodate a pinned workload, contact Expedient Support or Sales staff before performing any cache pinning operations.
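The effect of pinning on the usable cache can be estimated with simple arithmetic. The sketch below is illustrative only and is not Expedient's sizing method: it assumes that pinned data counts toward cache utilization and that the 75% High Water Mark described under Cache Eviction applies to the whole cache volume. The function name and the example figures are hypothetical.

```python
HIGH_WATER_MARK = 0.75  # 75% of the cache volume, as stated under Cache Eviction


def working_cache_after_pinning(cache_gb, pinned_gb):
    """Estimate the space (GB) left below the High Water Mark for unpinned data.

    Assumption: pinned data counts toward utilization, so every pinned GB
    reduces the room available to the working set by one GB.
    """
    return max(cache_gb * HIGH_WATER_MARK - pinned_gb, 0.0)


# Hypothetical example: a 1000 GB cache with 300 GB pinned.
print(working_cache_after_pinning(1000, 300))  # 450.0
```

Under these assumptions, pinning 300 GB on a 1000 GB cache leaves roughly 450 GB for the active data set before eviction begins, compared with 750 GB when nothing is pinned.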

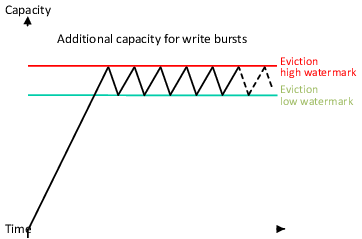

Cache Eviction

The local cache of a vGateway continues to store data until the drive used for caching becomes constrained for space. This is true regardless of how 'hot' or 'cold' the data is. The point at which the local system begins to stub files and remove them from cache is known as the High Water Mark, which is set at 75% of the cache volume. Once the cache drive reaches 75% utilization, the local filer begins stubbing files, starting with the Least Recently Used. This process continues to remove data from cache until the Low Water Mark is reached at 65% utilization.

This process will continue to run within the boundaries of the cache size and the High and Low Water Marks to ensure that the most recently used subset of data remains in local cache. As previously discussed, metadata and folders that are pinned to cache will not be evicted by this algorithm. Furthermore, any files that are currently open by an end user or service will be ignored by the caching algorithm.
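The eviction behavior described above can be sketched as a small simulation. This is a minimal illustration, not the vGateway's actual implementation: the `CachedFile` class, its fields, and the `evict` function are hypothetical names, and file sizes are in arbitrary units. Only the 75% and 65% thresholds, the Least Recently Used ordering, and the exclusion of pinned and open files come from the text.

```python
from dataclasses import dataclass

HIGH_WATER_MARK = 0.75  # eviction starts at 75% utilization (from the text)
LOW_WATER_MARK = 0.65   # eviction stops at 65% utilization (from the text)


@dataclass
class CachedFile:
    name: str
    size: int              # arbitrary units, e.g. GB
    last_used: int         # larger value = more recently used
    pinned: bool = False   # file lives in a folder pinned to cache
    is_open: bool = False  # file is held open by a user or service
    stubbed: bool = False  # True once the data has been removed from cache


def utilization(files, capacity):
    """Fraction of the cache occupied by files that are not stubs."""
    return sum(f.size for f in files if not f.stubbed) / capacity


def evict(files, capacity):
    """Stub least recently used files once the High Water Mark is reached.

    Returns the names of the stubbed files in eviction order.
    """
    evicted = []
    if utilization(files, capacity) < HIGH_WATER_MARK:
        return evicted  # below the High Water Mark: nothing to do

    # Pinned files, open files, and existing stubs are never candidates.
    candidates = sorted(
        (f for f in files if not (f.stubbed or f.pinned or f.is_open)),
        key=lambda f: f.last_used,
    )
    for f in candidates:
        if utilization(files, capacity) <= LOW_WATER_MARK:
            break  # Low Water Mark reached
        f.stubbed = True
        evicted.append(f.name)
    return evicted


# Hypothetical example: a cache of 100 units at 80% utilization.
files = [
    CachedFile("a", 10, last_used=1),
    CachedFile("b", 20, last_used=2, pinned=True),
    CachedFile("c", 10, last_used=3, is_open=True),
    CachedFile("d", 10, last_used=4),
    CachedFile("e", 30, last_used=5),
]
print(evict(files, capacity=100))  # ['a', 'd']
```

In this example the oldest file is stubbed first, the pinned and open files are skipped, and eviction stops once utilization falls to 60%, which is below the Low Water Mark.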

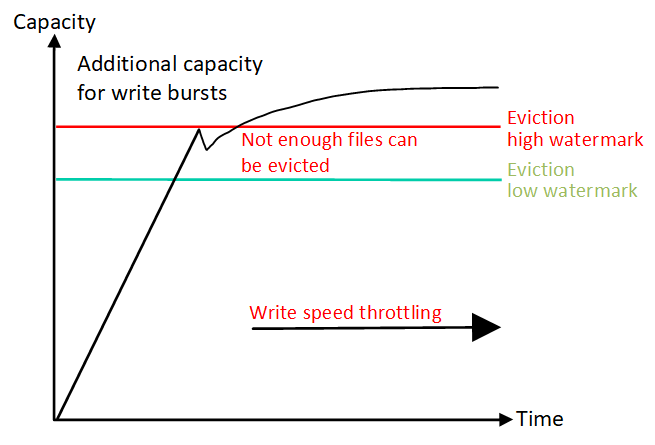

Cache Constrained Based Throttling

While uncommon, it is possible for the local cache to become overwhelmed and not contain enough free space for new data to be loaded onto the filer. If the High Water Mark of the cache is surpassed, I/O is not stopped but is instead throttled to the speed of the WAN link. This ensures that data remains consistent in the Global File System by writing to or reading from the back-end storage directly.

This is most commonly seen during initial migrations into the system, when data can be ingested locally faster than it can be uploaded, or when the cache has been undersized for the workload and a large read or write operation is performed. It can also be seen when too much data has been pinned to cache (as discussed above), which leaves less free space for the working set of data. Even when a caching gateway has reached its High Water Mark, 25% of the cache remains free for new writes before throttling occurs, which is why throttling is uncommon outside of these scenarios.
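The migration scenario can be illustrated with a rough estimate of how long sustained ingest can outpace the WAN upload before the free cache space is exhausted. This is a simplified sketch under stated assumptions, not a description of the platform's internals: it assumes data can only leave the cache after it has been uploaded, so the cache fills at the difference between the ingest and upload rates. The function name and the example rates are hypothetical.

```python
def hours_until_throttling(free_gb, ingest_gb_per_hour, upload_gb_per_hour):
    """Estimate hours of sustained ingest before free cache space runs out.

    Assumption: the cache grows at (ingest rate - upload rate).
    Returns None when the upload keeps pace and the cache never fills.
    """
    net_growth = ingest_gb_per_hour - upload_gb_per_hour
    if net_growth <= 0:
        return None
    return free_gb / net_growth


# Hypothetical example: 250 GB free (25% of a 1000 GB cache),
# ingesting 200 GB/hour locally while the WAN uploads 75 GB/hour.
print(hours_until_throttling(250, 200, 75))  # 2.0
```

With these example figures, the free space would last about two hours of continuous ingest, after which I/O would proceed at WAN speed.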