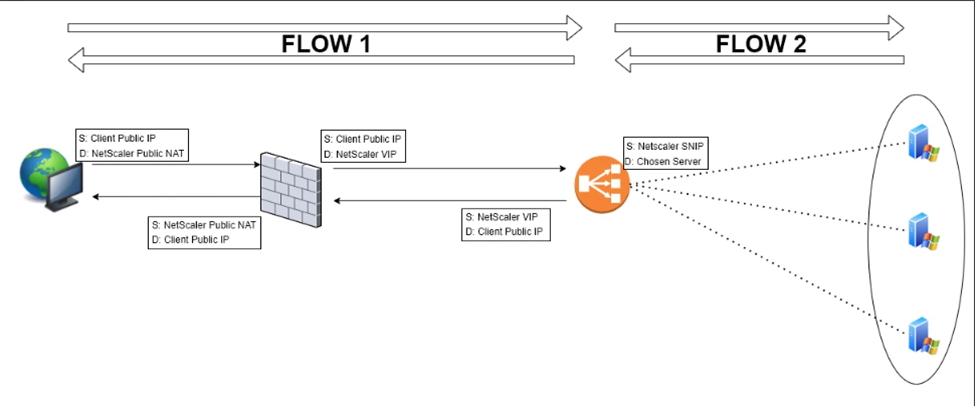

The diagram above and the steps below illustrate a typical traffic flow through an Expedient-managed load balancer solution.

The flow steps are explained below:

The client connects to the public IP address of the load-balanced server group. Typically, a public DNS record resolves to this address.

When the request reaches the firewall (if applicable), it is evaluated against the firewall's policies. If the request is permitted, the firewall creates a session and uses destination NAT to translate the public IP address to the IP address of the load balancer VIP.
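The firewall step can be modeled as a policy check followed by a destination NAT rewrite. The sketch below is illustrative only: the addresses (203.0.113.10 as the public IP, 10.0.10.50 as the VIP), the single permitted port, and the names `Packet`, `DNAT_RULES`, `ALLOWED_PORTS`, and `firewall_inbound` are hypothetical and do not reflect any particular firewall's configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


# Hypothetical DNAT rule: public IP:port -> load balancer VIP:port
DNAT_RULES = {("203.0.113.10", 443): ("10.0.10.50", 443)}
# Hypothetical policy: only HTTPS is permitted inbound
ALLOWED_PORTS = {443}
# Session table keyed on the client's original 4-tuple, so return traffic can be un-NATed
sessions = {}


def firewall_inbound(pkt: Packet) -> Optional[Packet]:
    """Apply policy, create a session, and rewrite the destination to the VIP."""
    if pkt.dst_port not in ALLOWED_PORTS:
        return None  # denied by policy: no session, no translation
    target = DNAT_RULES.get((pkt.dst_ip, pkt.dst_port))
    if target is None:
        return None  # no NAT rule for this public address
    translated = Packet(pkt.src_ip, pkt.src_port, target[0], target[1])
    sessions[(pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port)] = translated
    return translated


print(firewall_inbound(Packet("198.51.100.7", 51515, "203.0.113.10", 443)))
# Packet(src_ip='198.51.100.7', src_port=51515, dst_ip='10.0.10.50', dst_port=443)
```

Only the destination address is rewritten; the client's source IP is preserved on its way to the VIP.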

The VIP (virtual IP) is a virtual server hosted on the load balancer itself. The client's connection to the VIP is Flow 1, which pauses here in this outline.

The VIP is bound to a "Service Group" that contains one or more back-end servers. The load balancer selects which server receives the request using the configured load-balancing method: "least connection" by default, or alternatives such as "round robin" and "response time".
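A rough model of a Service Group and two of the methods named above follows. It is a simplification, not the appliance's actual algorithm: `Server`, `ServiceGroup`, the connection counts, and the method strings `"least_connection"` and `"round_robin"` are hypothetical names chosen for illustration, and real implementations may weight servers, break ties, or track state differently.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    ip: str
    active_connections: int = 0


class ServiceGroup:
    """Servers bound to a VIP plus the method used to choose among them (illustrative)."""

    def __init__(self, servers, method="least_connection"):
        self.servers = list(servers)
        self.method = method  # default mirrors the "least connection" default above
        self._rr = itertools.cycle(self.servers)

    def pick(self) -> Server:
        if self.method == "least_connection":
            # Fewest active connections wins; ties go to the first server listed
            return min(self.servers, key=lambda s: s.active_connections)
        if self.method == "round_robin":
            # Hand out servers in a fixed rotation, ignoring current load
            return next(self._rr)
        raise ValueError(f"unsupported method: {self.method}")


group = ServiceGroup([Server("A", "10.0.20.11", 3), Server("B", "10.0.20.12", 1)])
print(group.pick().name)  # B
group.method = "round_robin"
print([group.pick().name for _ in range(3)])  # ['A', 'B', 'A']
```

Least connection adapts to uneven load (Server B is chosen because it has fewer open connections), whereas round robin rotates through the servers regardless of how busy each one is.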

The load balancer then starts a new flow (Flow 2), using the load balancer's SNIP* as the source IP and the selected back-end server as the destination IP.
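The sketch below shows how Flow 2's addressing differs from Flow 1's: the load balancer becomes the source, and a lookup table ties the two flows together so the server's response can later be relayed to the right client. For clarity it opens a fresh back-end connection per request and ignores the muxing behavior described under "Muxing". The SNIP address, the port allocator, and the names `Flow`, `open_backend_flow`, and `flow_map` are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


SNIP = "10.0.20.5"  # hypothetical SNIP on the back-end subnet
_source_ports = itertools.count(49152)  # naive ephemeral source-port allocator
flow_map = {}  # Flow 2 -> Flow 1, used to relay each response to the right client


def open_backend_flow(client_flow: Flow, server_ip: str, server_port: int) -> Flow:
    """Start Flow 2 from the SNIP to the selected server and remember its Flow 1."""
    backend = Flow(SNIP, next(_source_ports), server_ip, server_port)
    flow_map[backend] = client_flow
    return backend


flow1 = Flow("198.51.100.7", 51515, "10.0.10.50", 443)  # client -> VIP
flow2 = open_backend_flow(flow1, "10.0.20.12", 443)     # SNIP -> Server B
print(flow2)
# Flow(src_ip='10.0.20.5', src_port=49152, dst_ip='10.0.20.12', dst_port=443)
print(flow_map[flow2] == flow1)  # True
```

The client's IP appears only in Flow 1; nothing in Flow 2 carries it, which is consistent with the servers not seeing the client's address by default.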

The server responds to the load balancer's request, completing Flow 2.

The load balancer copies the server's Flow 2 response and sends it back to the client from its VIP as the return traffic for Flow 1.

The firewall (if applicable) matches the return traffic to the session it created earlier, translates the source IP address from the VIP back to the public IP the client originally connected to, and forwards the traffic to the client.
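Putting the steps together, the following trace lists the source and destination IP at each leg of both flows. The addresses are hypothetical examples (documentation ranges for the public side, private ranges for the VIP, SNIP, and server), and `trace` is an illustrative helper rather than a real tool.

```python
def trace(client, public_ip, vip, snip, server):
    """Return (leg, source IP, destination IP) for each step of Flow 1 and Flow 2."""
    return [
        ("Flow 1 request, client to firewall", client, public_ip),
        ("Flow 1 request, after DNAT", client, vip),
        ("Flow 2 request, SNIP to server", snip, server),
        ("Flow 2 response, server to SNIP", server, snip),
        ("Flow 1 response, VIP to firewall", vip, client),
        ("Flow 1 response, after un-NAT", public_ip, client),
    ]


legs = trace(client="198.51.100.7", public_ip="203.0.113.10",
             vip="10.0.10.50", snip="10.0.20.5", server="10.0.20.12")
for leg, src, dst in legs:
    print(f"{leg:36} {src:>13} -> {dst}")
```

The client only ever exchanges packets with the public IP, and the server only ever exchanges packets with the SNIP; in this model, the load balancer is the only component that sees both the client's address and the server's.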

*SNIP: In addition to its management IPs and VIPs, the load balancer must have a Subnet IP (SNIP) in each subnet that contains back-end servers. The SNIP is the source IP for Flow 2, so the servers send their responses to it and, by default, are unaware of the original client's IP address.
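The per-subnet SNIP rule can be sketched with Python's standard `ipaddress` module: given a server's address, choose the SNIP whose subnet contains it. The SNIP list and the `snip_for` helper are hypothetical, and an actual appliance's source-IP selection may involve more than this simple subnet match.

```python
import ipaddress

# Hypothetical SNIPs, one per back-end subnet (address plus prefix length)
SNIPS = [
    ipaddress.ip_interface("10.0.20.5/24"),
    ipaddress.ip_interface("10.0.30.5/24"),
]


def snip_for(server_ip: str) -> str:
    """Return the SNIP in the same subnet as the server; it becomes Flow 2's source IP."""
    addr = ipaddress.ip_address(server_ip)
    for snip in SNIPS:
        if addr in snip.network:
            return str(snip.ip)
    raise LookupError(f"no SNIP configured for the subnet of {server_ip}")


print(snip_for("10.0.20.12"))  # 10.0.20.5
print(snip_for("10.0.30.21"))  # 10.0.30.5
```

A server in a subnet with no SNIP raises `LookupError`, mirroring the requirement that every back-end subnet needs its own SNIP.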

Muxing: By default, the load balancer multiplexes ("muxes") client connections onto back-end connections. Rather than opening a new TCP session to a server for every client connection, it keeps one or a few TCP sessions open to each back-end server and reuses them whenever that server is selected by the load-balancing method. For example, with two back-end servers, one hundred client TCP connections to the VIP might be carried over a single TCP connection (or a small number of them) to Server A, and likewise to Server B. Fewer TCP sessions between the load balancer and the servers means less connection setup and per-session overhead, which can save significant bandwidth.
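The reuse behavior can be illustrated with a small connection pool. This is a simplified model that assumes each pooled connection serves one request at a time; `BackendPool` and the connection labels are hypothetical, and the appliance's actual reuse rules are not modeled.

```python
from collections import defaultdict


class BackendPool:
    """Reuse idle back-end connections instead of opening one per client (illustrative)."""

    def __init__(self):
        self.idle = defaultdict(list)   # server -> idle connection labels
        self.opened = defaultdict(int)  # server -> total connections ever opened

    def acquire(self, server):
        if self.idle[server]:
            return self.idle[server].pop()  # reuse an existing TCP connection
        self.opened[server] += 1            # none idle: open a new one
        return f"{server}-conn{self.opened[server]}"

    def release(self, server, conn):
        self.idle[server].append(conn)      # keep it open for the next request


pool = BackendPool()
servers = ["A", "B"]
for i in range(100):  # 100 client connections, served one after another
    server = servers[i % 2]
    conn = pool.acquire(server)
    pool.release(server, conn)  # request finished; connection returns to the pool
print(dict(pool.opened))  # {'A': 1, 'B': 1}
```

Because each request finishes before the next begins, the 100 client connections are served over one connection per server. If requests to the same server overlap, `acquire` finds no idle connection and opens another, which is why the pool holds "a single (or small number of)" connections rather than exactly one.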